The New Standard for LLM Agents: A Comprehensive Guide to MCP

TL;DR

- MCP (Model Context Protocol) is a protocol for LLM agents to connect to various data sources (content, tools, etc.).

- Purpose: Standardize connections and simplify the management of AI systems.

- Difference from Tools: MCP is a protocol; tools are functionalities exposed through MCP.

- Previous Problem: Connecting LLMs to different data sources required custom implementations.

- Benefits of MCP: As a standardized protocol, it allows connection to various data sources without custom implementations (similar to REST APIs).

- Architecture:

  - MCP Client: LLM agents or applications (e.g., Claude Desktop, IDEs).

  - MCP Server: Lightweight programs that connect to specific data sources or tools and expose their functionalities.

- What MCP Servers Can Do:

  - Tools: Expose executable functions to clients (e.g., calculations, searches).

  - Resources: Expose data and content.

  - Prompts, Sampling, and Roots (currently, Tools and Resources are mainly used).

- Current Status: A new technology announced in November 2024, still under development. Primarily used for tool and resource access.

- Future Potential: Likely to develop significantly as the popularity of agents grows.

What is MCP?

MCP (Model Context Protocol) is a protocol designed to allow LLM agents to connect to various data sources, such as content and tools. It aims to simplify the management of AI systems by standardizing connections with a single protocol. Since its announcement by Anthropic in November 2024, MCP has been utilized in LLM-based applications like Cursor, playing a vital role in the evolution of LLM agents.

The Problem MCP Solves (Difference from Tools)

I first learned about MCP through Cline (an open-source alternative to Cursor). Initially, I thought, "Why give it such a complicated name? Isn't this just another tool?" However, a closer look revealed that MCP is a protocol, which is distinct from a tool.

Let's consider two scenarios:

- Person A is developing an LLM agent application using LlamaIndex. They want to use tools available in LangChain, but they're unsure if it's possible and need to investigate.

- Person B is developing an LLM agent application in TypeScript. They need a search tool, but the LangChain Python library has a duckduckgosearch tool, which they can't use due to the language difference.

Traditionally, connecting LLMs to different data sources like these required individual custom implementations. However, with a standardized protocol like MCP, connecting to various data sources is easy and doesn't require custom implementations (it's very similar to REST APIs).

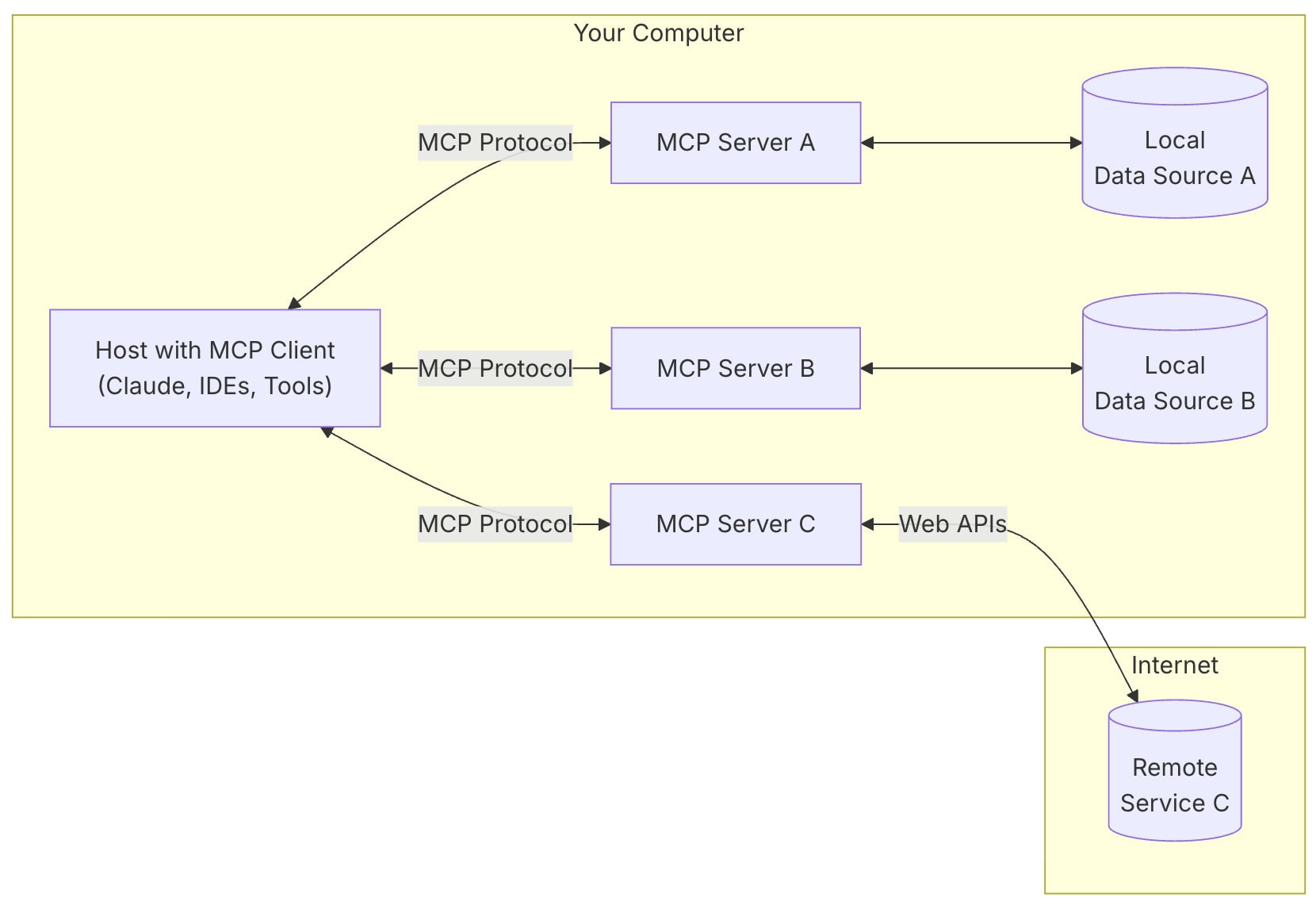

MCP Architecture

MCP adopts a client-server architecture:

- MCP Client: LLM agents or applications that use MCP to access data (e.g., Claude Desktop, IDEs, AI tools).

- MCP Server: Lightweight programs that connect to specific data sources or tools and expose their functionalities through MCP (e.g., file systems, databases, web services).

A single client application (the host, in MCP terminology) can connect to multiple servers, each providing access to a different data source. This design lets an AI application reach diverse data without custom integrations. The protocol is built on JSON-RPC 2.0, so it can be implemented in any programming language that can read and write JSON.
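Because MCP messages are plain JSON-RPC objects, any language with a JSON library can produce and consume them. The sketch below builds the request a client would send to invoke a tool, plus a matching response. The `tools/call` method name and the `name`/`arguments` parameters follow the MCP specification; the helper names, the `id` value, and the example payload are illustrative assumptions.

```python
import json


def make_request(request_id: int, method: str, params: dict) -> str:
    """Serialize a JSON-RPC 2.0 request as a single line of JSON."""
    return json.dumps(
        {"jsonrpc": "2.0", "id": request_id, "method": method, "params": params}
    )


def make_result(request_id: int, result: dict) -> str:
    """Serialize a successful JSON-RPC 2.0 response for the given request id."""
    return json.dumps({"jsonrpc": "2.0", "id": request_id, "result": result})


# Request asking a server to run a tool named "calculator" (illustrative payload)
request = make_request(
    1, "tools/call", {"name": "calculator", "arguments": {"expression": "4**2"}}
)
# Response carrying the tool output as a list of content parts
response = make_result(
    1, {"content": [{"type": "text", "text": "16"}], "isError": False}
)

print(request)
print(response)
```

The response reuses the request's `id`, which is how a client matches replies to outstanding requests.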

Building an MCP Server in Practice

Since MCP is a protocol, you can build and access servers regardless of the programming language. However, it's still under development, and the currently supported languages (with available SDKs) are Python, TypeScript, Java, and Kotlin. This example demonstrates implementation in Python.

Environment Setup

This example uses uv for Python package management. First, install it:

    curl -LsSf https://astral.sh/uv/install.sh | sh

Next, restart your terminal and set up the Python environment:

    # Create a new project directory
    uv init calculator
    cd calculator

    # Create and activate a virtual environment
    uv venv
    source .venv/bin/activate

    # Install dependencies
    uv add "mcp[cli]"

    # Create the server file
    touch calculator.py

Implementing the Server Logic

Write the following script into calculator.py. This creates an MCP server with a tool that calculates a mathematical expression and returns the result:

    from typing import Any

    from mcp.server.fastmcp import FastMCP

    # Create a server instance named "calculator"
    mcp = FastMCP("calculator")


    @mcp.tool()
    def calculator(expression: str) -> Any:
        """Calculate a mathematical expression.

        Args:
            expression: Mathematical expression to evaluate
        """
        # eval() executes arbitrary Python: fine for a local demo, unsafe for untrusted input
        result = eval(expression)
        return result


    if __name__ == "__main__":
        # Initialize and run the server
        mcp.run(transport="stdio")

This is quite similar to creating a tool in LangChain with Python and serving it with FastAPI.
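Since `eval` runs whatever Python it is given, an expression produced by an LLM could execute any code on the machine hosting the server. One possible hardening, offered here as an assumption and not as part of the original example, is to parse the expression with the standard `ast` module and allow only numeric literals and arithmetic operators. The name `safe_eval` is hypothetical.

```python
import ast
import operator
from typing import Union

# Arithmetic operators the evaluator is willing to apply
_BINARY_OPS = {
    ast.Add: operator.add,
    ast.Sub: operator.sub,
    ast.Mult: operator.mul,
    ast.Div: operator.truediv,
    ast.FloorDiv: operator.floordiv,
    ast.Mod: operator.mod,
    ast.Pow: operator.pow,
}
_UNARY_OPS = {ast.UAdd: operator.pos, ast.USub: operator.neg}


def safe_eval(expression: str) -> Union[int, float]:
    """Evaluate an arithmetic expression without executing arbitrary code."""

    def _eval(node: ast.AST) -> Union[int, float]:
        if isinstance(node, ast.Expression):
            return _eval(node.body)
        # Accept only int and float literals (bool is excluded by the exact type check)
        if isinstance(node, ast.Constant) and type(node.value) in (int, float):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _BINARY_OPS:
            return _BINARY_OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and type(node.op) in _UNARY_OPS:
            return _UNARY_OPS[type(node.op)](_eval(node.operand))
        # Names, calls, attribute access, and everything else are rejected
        raise ValueError(f"Unsupported expression element: {type(node).__name__}")

    return _eval(ast.parse(expression, mode="eval"))


print(safe_eval("4**2"))
print(safe_eval("(1 + 2) * 3"))
```

Swapping `eval(expression)` for `safe_eval(expression)` in the tool body would keep the interface unchanged. This sketch does not bound operand sizes, so a very large exponent can still consume a lot of CPU time.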

Accessing the MCP Server

Connection

The following code connects to the server. The environment is arbitrary, so this example uses a Jupyter notebook:

    from contextlib import AsyncExitStack

    from mcp import ClientSession, StdioServerParameters
    from mcp.client.stdio import stdio_client

    exit_stack = AsyncExitStack()

    command = "python"  # Because the server code was written in Python
    server_script_path = "calculator.py"  # Path to the server code

    server_params = StdioServerParameters(
        command=command, args=[server_script_path], env=None
    )

    stdio, write = await exit_stack.enter_async_context(stdio_client(server_params))
    session = await exit_stack.enter_async_context(ClientSession(stdio, write))

    await session.initialize()

This code includes elements like StdioServerParameters, but you don't need to understand their inner workings; just use them as shown.
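One detail the connection snippet leaves implicit is cleanup: everything entered on an `AsyncExitStack` stays open until the stack is closed, so a notebook session would eventually call `await exit_stack.aclose()` to shut down the session and the server process. The sketch below shows that behavior with two stand-in context managers. The names `fake_transport` and `fake_session` are hypothetical and replace the real MCP objects so the snippet runs on its own.

```python
import asyncio
from contextlib import AsyncExitStack, asynccontextmanager

events = []  # Records the order in which resources are opened and closed


@asynccontextmanager
async def fake_transport():
    # Stand-in for stdio_client(...), which would start the server subprocess
    events.append("transport opened")
    try:
        yield "read-stream", "write-stream"
    finally:
        events.append("transport closed")


@asynccontextmanager
async def fake_session(read, write):
    # Stand-in for ClientSession(read, write)
    events.append("session opened")
    try:
        yield "session"
    finally:
        events.append("session closed")


async def main() -> None:
    exit_stack = AsyncExitStack()
    read, write = await exit_stack.enter_async_context(fake_transport())
    await exit_stack.enter_async_context(fake_session(read, write))
    # ... the session would be used here ...
    await exit_stack.aclose()  # Closes in reverse order: session, then transport


asyncio.run(main())
print(events)
```

In a notebook, where an event loop is already running, `await main()` would replace `asyncio.run(main())`.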

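The connection information consists of a command and the path to the server script because this example uses the stdio transport: the client launches the server as a local subprocess and exchanges messages with it over standard input and output. The command depends on how the server is written and packaged. Here the script is run directly with python; published servers are commonly launched with uvx (Python) or npx (TypeScript). The protocol also defines an HTTP-based transport for connecting over a network, but remote connections are still maturing, and at the time of writing most MCP servers run locally.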
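To make the local launch concrete, the sketch below imitates what a stdio connection does at the lowest level: the client starts the server as a child process, writes one JSON-RPC message per line to its standard input, and reads the reply from its standard output. The child program is a toy responder written for this illustration, not a real MCP server, and it skips the initialization handshake that a real session performs.

```python
import json
import subprocess
import sys

# Toy "server": answers each request line with a JSON-RPC result naming the method
CHILD_SOURCE = r"""
import json, sys
for line in iter(sys.stdin.readline, ""):
    request = json.loads(line)
    reply = {"jsonrpc": "2.0", "id": request["id"], "result": {"echo": request["method"]}}
    sys.stdout.write(json.dumps(reply) + "\n")
    sys.stdout.flush()
"""

# Launch the child with the current interpreter, piping its stdin and stdout
proc = subprocess.Popen(
    [sys.executable, "-c", CHILD_SOURCE],
    stdin=subprocess.PIPE,
    stdout=subprocess.PIPE,
    text=True,
)

# Send one newline-delimited JSON-RPC request and read one response line
proc.stdin.write(json.dumps({"jsonrpc": "2.0", "id": 1, "method": "ping"}) + "\n")
proc.stdin.flush()
reply = json.loads(proc.stdout.readline())

# Closing stdin ends the child's read loop so it exits cleanly
proc.stdin.close()
proc.wait(timeout=10)
proc.stdout.close()

print(reply)
```

The SDK's `stdio_client` and `ClientSession` wrap this kind of plumbing, adding the handshake, request tracking, and typed results.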

Request

Let's execute a request. First, check what tools are available on the server:

    response = await session.list_tools()
    tools = response.tools
    print(tools[0].model_dump_json(indent=4))

Output:

    {
        "name": "calculator",
        "description": "Calculate a mathematical expression.\n\n Args:\n expression: Mathematical expression to evaluate\n ",
        "inputSchema": {
            "properties": {
                "expression": {
                    "title": "Expression",
                    "type": "string"
                }
            },
            "required": [
                "expression"
            ],
            "title": "calculatorArguments",
            "type": "object"
        }
    }

The details of the calculator tool are displayed.

If this tool information is sent to the LLM, the LLM can call the tool in response to a user's request. For example, if the LLM receives the question, "What is the result of 4**2?", it can use the tool to calculate it (the LLM interaction is omitted for brevity). You can send the request to the MCP server and obtain the result as follows:

    tool_name = "calculator"
    tool_args = {"expression": "4**2"}
    result = await session.call_tool(tool_name, tool_args)
    result

Output:

    CallToolResult(meta=None, content=[TextContent(type='text', text='16', annotations=None)], isError=False)

Passing the desired tool and parameters to the server returns the result. The LLM can then use this result to respond to the user.
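The LLM interaction omitted above mostly consists of two small translation steps: turning each MCP tool description into the tool format the LLM API expects, and turning the tool result back into text. The sketch below assumes an API that accepts tools as `name`/`description`/`input_schema` dictionaries (the shape used by Anthropic's Messages API); other providers use different keys. The function names are hypothetical, and stand-in objects replace the real tool and result instances so the snippet runs without a server.

```python
from types import SimpleNamespace


def to_llm_tool(tool) -> dict:
    """Convert an MCP tool description into a name/description/input_schema dict."""
    return {
        "name": tool.name,
        "description": tool.description,
        "input_schema": tool.inputSchema,
    }


def result_text(result) -> str:
    """Join the text parts of a tool result, raising if the tool reported an error."""
    text = "\n".join(part.text for part in result.content if part.type == "text")
    if result.isError:
        raise RuntimeError(f"Tool call failed: {text}")
    return text


# Stand-ins mirroring the fields shown in the outputs above
tool = SimpleNamespace(
    name="calculator",
    description="Calculate a mathematical expression.",
    inputSchema={
        "type": "object",
        "properties": {"expression": {"title": "Expression", "type": "string"}},
        "required": ["expression"],
    },
)
result = SimpleNamespace(
    content=[SimpleNamespace(type="text", text="16")], isError=False
)

print(to_llm_tool(tool)["name"])
print(result_text(result))
```

With a live session, the same helpers would be applied to the objects returned by `session.list_tools()` and `session.call_tool(...)`.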

Features Beyond Tools

Here's a summary of what else can be done with MCP servers besides using tools:

- Resources: Resources are core primitives of MCP, enabling servers to expose data and content. Clients can read them and use them as context for interactions with the LLM.

- Prompts: Prompts allow servers to define reusable prompt templates and workflows. Clients can easily present these to users or LLMs. Prompts provide a powerful way to standardize and share common interactions with LLMs.

- Tools: Tools allow servers to expose executable functions to clients. Through tools, LLMs can interact with external systems, perform calculations, and take actions.

- Sampling: Sampling allows servers to request LLM completions through clients, enabling advanced agentic behavior while maintaining security and privacy.

- Roots: Roots define the boundaries within which a server can operate. They provide a way for clients to inform the server about related resources and their locations.
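On the wire, each of these features corresponds to a small set of JSON-RPC methods. The mapping below lists the main request methods defined by the MCP specification and which side sends them. It is a partial summary (notifications and subscription methods are omitted), and the variable and function names are illustrative.

```python
# Main JSON-RPC request methods per MCP feature, with the side that sends the request
FEATURE_METHODS = {
    "resources": {"sender": "client", "methods": ["resources/list", "resources/read"]},
    "prompts": {"sender": "client", "methods": ["prompts/list", "prompts/get"]},
    "tools": {"sender": "client", "methods": ["tools/list", "tools/call"]},
    "sampling": {"sender": "server", "methods": ["sampling/createMessage"]},
    "roots": {"sender": "server", "methods": ["roots/list"]},
}


def feature_for(method: str) -> str:
    """Return the feature a method belongs to, based on its prefix."""
    prefix = method.split("/", 1)[0]
    if prefix not in FEATURE_METHODS:
        raise KeyError(f"Unknown MCP feature prefix: {prefix}")
    return prefix


for feature, info in FEATURE_METHODS.items():
    print(f"{feature:<10} sent by {info['sender']:<7} {', '.join(info['methods'])}")
```

Sampling and roots run in the opposite direction from the other three: the server sends the request and the client answers it.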

While there are five features, they are mostly used for tool usage and resource access at present. Below is a summary of the features supported by each client.

| Client | Resources | Prompts | Tools | Sampling | Roots | Notes |
|---|---|---|---|---|---|---|
| Claude Desktop App | ✅ | ✅ | ✅ | ❌ | ❌ | Supports resources, prompts, and tools. |
| 5ire | ❌ | ❌ | ✅ | ❌ | ❌ | Supports tools. |
| BeeAI Framework | ❌ | ❌ | ✅ | ❌ | ❌ | Supports tools in agentic workflows. |
| Cline | ✅ | ❌ | ✅ | ❌ | ❌ | Supports tools and resources. |
| Continue | ✅ | ✅ | ✅ | ❌ | ❌ | Supports resources, prompts, and tools. |
| Cursor | ❌ | ❌ | ✅ | ❌ | ❌ | Supports tools. |
| Emacs Mcp | ❌ | ❌ | ✅ | ❌ | ❌ | Supports tools in Emacs. |
| Firebase Genkit | ⚠️ | ✅ | ✅ | ❌ | ❌ | Supports resource list and lookup through tools. |
| GenAIScript | ❌ | ❌ | ✅ | ❌ | ❌ | Supports tools. |
| Goose | ❌ | ❌ | ✅ | ❌ | ❌ | Supports tools. |
| LibreChat | ❌ | ❌ | ✅ | ❌ | ❌ | Supports tools for Agents. |
| mcp-agent | ❌ | ❌ | ✅ | ⚠️ | ❌ | Supports tools, server connection management, and agent workflows. |
| Roo Code | ✅ | ❌ | ✅ | ❌ | ❌ | Supports tools and resources. |
| Sourcegraph Cody | ✅ | ❌ | ❌ | ❌ | ❌ | Supports resources through OpenCTX. |
| Superinterface | ❌ | ❌ | ✅ | ❌ | ❌ | Supports tools. |
| TheiaAI/TheiaIDE | ❌ | ❌ | ✅ | ❌ | ❌ | Supports tools for Agents in Theia AI and the AI-powered Theia IDE. |
| Windsurf Editor | ❌ | ❌ | ✅ | ❌ | ❌ | Supports tools with AI Flow for collaborative development. |
| Zed | ❌ | ✅ | ❌ | ❌ | ❌ | Prompts appear as slash commands. |
| OpenSumi | ❌ | ❌ | ✅ | ❌ | ❌ | Supports tools in OpenSumi. |

Conclusion

To reiterate, MCP (Model Context Protocol) is a protocol that standardizes communication between LLMs and external resources. On social media, "MCP" often refers specifically to MCP servers. It was announced in November 2024 and is a very new technology, only four months old at the time of writing. It offers features such as tools and resources, but it is still under development and not yet fully utilized. However, given the current interest in agents, it is likely that much of the tool functionality available today will be replaced by MCP servers.